|

I am a member of technical staff at Generalist AI. I graduated from MIT EECS, where I worked with Julie Shah on human-robot interaction, specifically inference-time policy steering. Before MIT, I studied robotics at Northwestern and physics at Middlebury. Outside research, I enjoy theatre and backpacking. I thru-hiked the PCT in 2019. Email / Google Scholar / LinkedIn / CV / X / PhD (Thesis / Talk)

|

|

Imagine driving with Google Maps, where multiple routes unfold before you. As you take turns and change plans, it adapts instantly, recalculating to match your shifting preferences. My research goal is to bring this level of interactivity to multimodal embodied AI, empowering humans to steer pre-trained foundation models at inference time. For video explainers, check out my MIT Student Spotlight (more technical) or Bloomberg News (more accessible). |

|

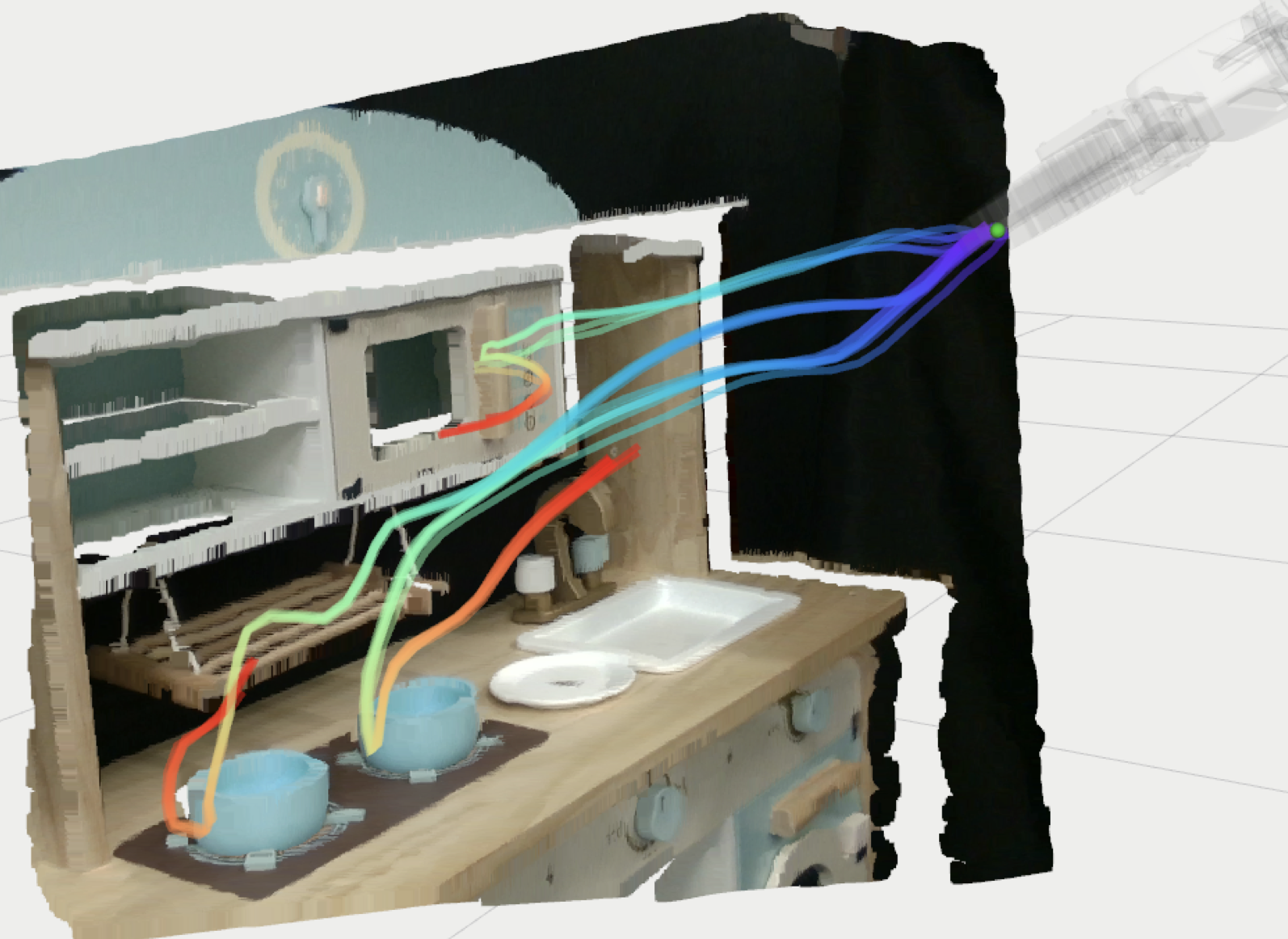

Yanwei Wang, Lirui Wang, Yilun Du, Balakumar Sundaralingam, Xuning Yang, Yu-Wei Chao, Claudia Perez-D'Arpino, Dieter Fox, Julie Shah arxiv / code / project page / twitter / MIT News ICRA 2025 We propose Inference-Time Policy Steering (ITPS), a framework that leverages human interactions to zero-shot adapt pre-trained generative policies for downstream tasks without any additional data collection or fine-tuning. |

|

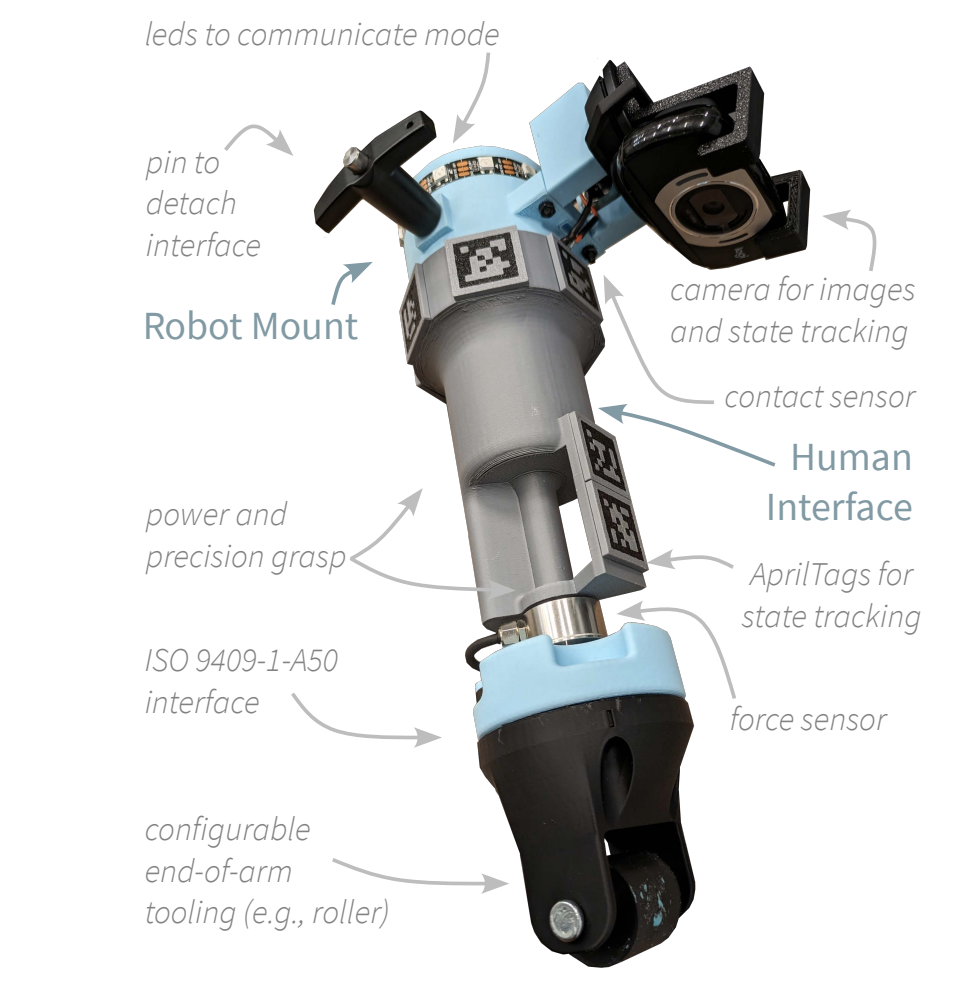

Michael Hagenow, Dimosthenis Kontogiorgos, Yanwei Wang, Julie Shah arxiv IROS 2025 We present the Versatile Demonstration Interface (VDI), a collaborative robot tool designed to enable seamless transitions between data collection modes—teleoperation, kinesthetic teaching, and natural demonstrations—without the need for additional environmental instrumentation. |

|

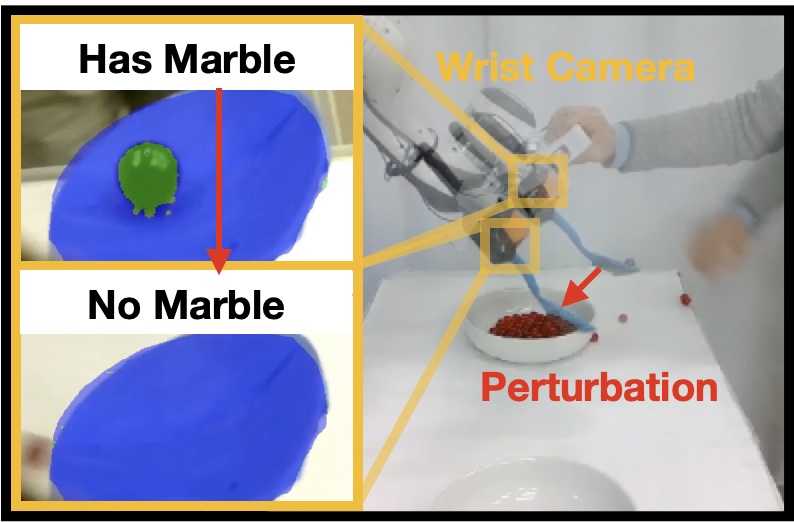

Yanwei Wang, Tsun-Hsuan Wang, Jiayuan Mao, Michael Hagenow, Julie Shah arxiv / code / project page / MIT News / techcrunch ICLR 2024 (Spotlight) This work learns grounding classifiers for LLM planning. Our end-to-end explanation-based network is trained to differentiate successful demonstrations from failing counterfactuals and as a by-product learns classifiers that ground continuous states into discrete manipulation mode families without dense labeling. |

|

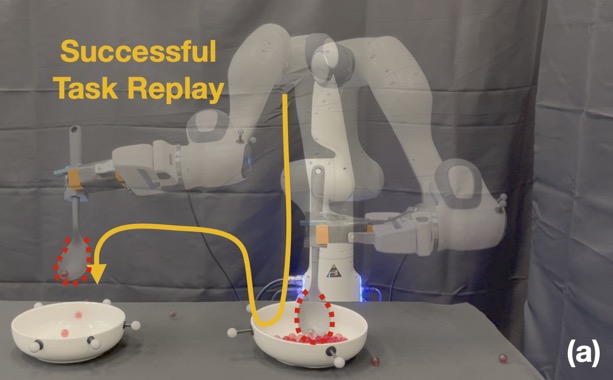

Yanwei Wang, Nadia Figueroa, Shen Li, Ankit Shah, Julie Shah arxiv / code / project page / PBS News CoRL 2022 (Oral) IROS 2023 Workshop (Best Student Paper, Learning Meets Model-based Methods for Manipulation and Grasping) We present a continuous motion imitation method that can provably satisfy any discrete plan specified by a Linear Temporal Logic (LTL) formula. Consequently, the imitator is robust to both task- and motion-level disturbances and guaranteed to achieve task success. |

|

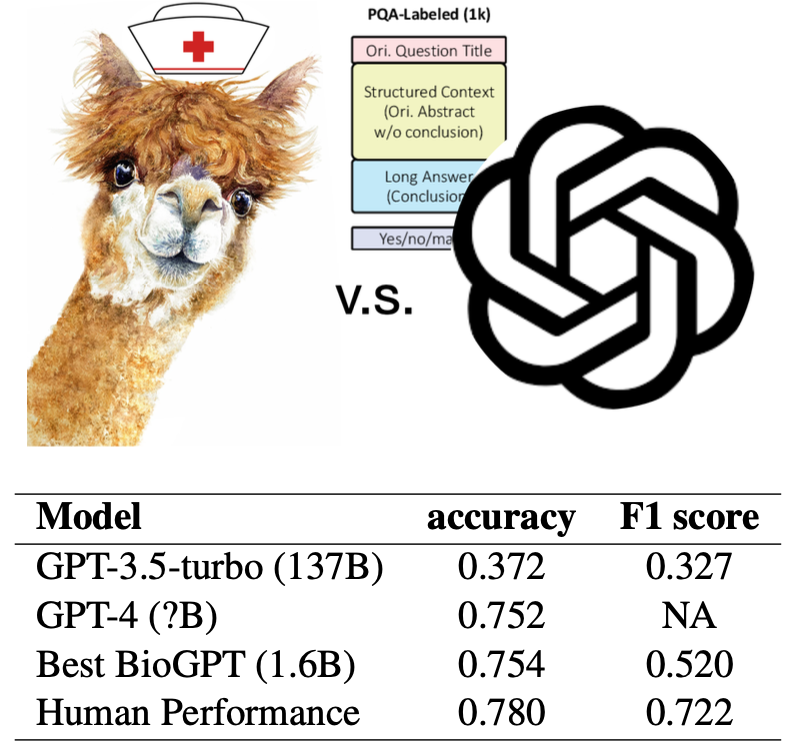

Zhen Guo, Yanwei Wang, Peiqi Wang, Shangdi Yu arxiv KDD 2023 (Foundations and Applications in Large-scale AI Models Pre-training, Fine-tuning, and Prompt-based Learning Workshop) We prompt large language models to augment a domain-specific dataset to train specialized small language models that outperform the general-purpose LLM. |

|

Yanwei Wang, Ching-Yun Ko, Pulkit Agrawal arxiv / code / project page IROS 2023 NeurIPS 2022 Workshop (Synthetic Data for Empowering ML Research / Self-Supervised Learning) By learning how to pan, tilt and zoom its camera to focus on random crops of a noise image, an embodied agent can pick up navigation skills in realistically simulated environments. |

|

Nadia Figueroa, Yanwei Wang, Julie Shah We installed an interactive exhibition at the MIT Museum that allows non-robot-experts to teach a robot an inspection task using demonstrations. The robustness and compliance of the learned motion policy enable visitors (including kids) to physically perturb the system safely 24/7 without losing a success guarantee. |

|

|