Abstract


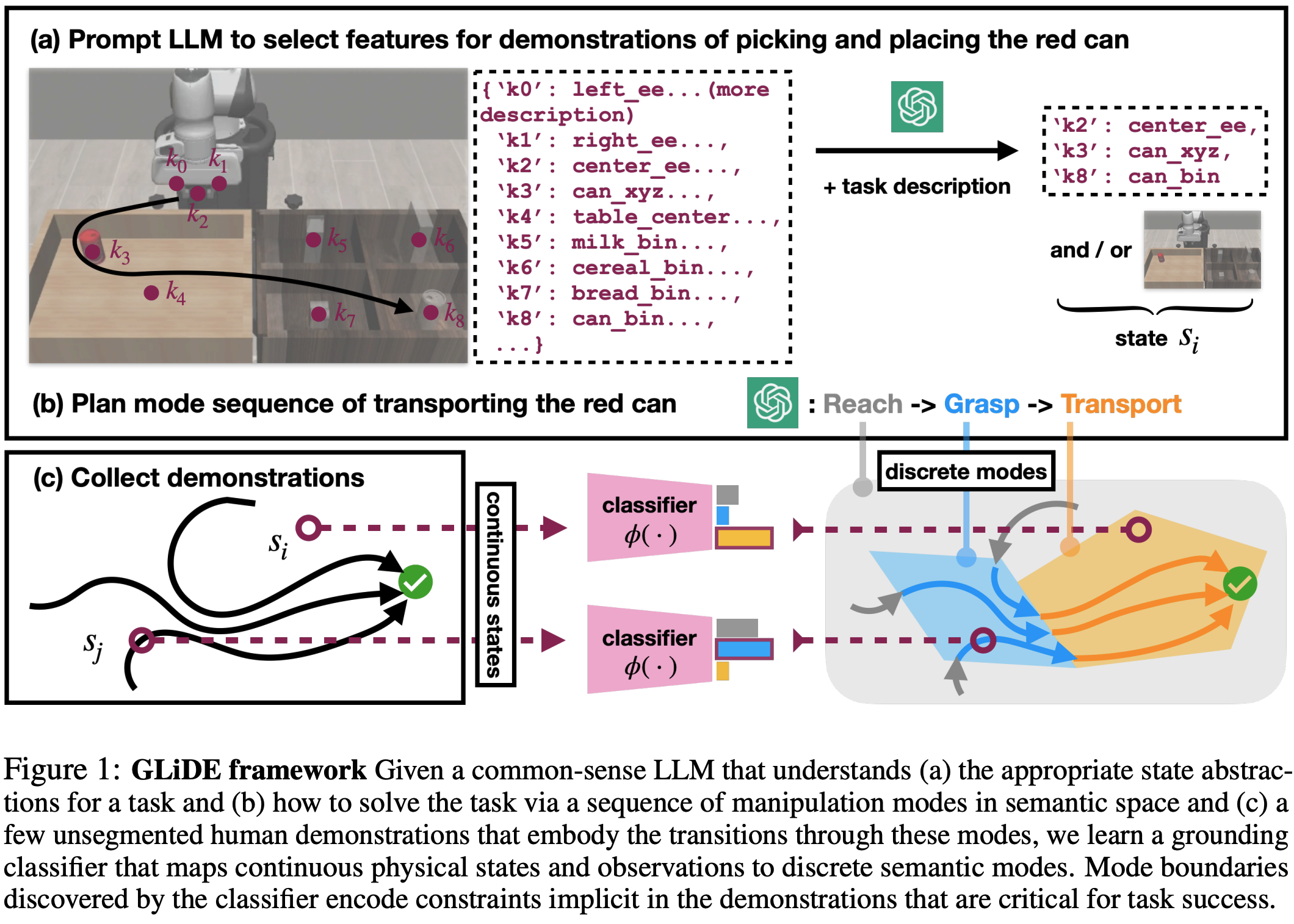

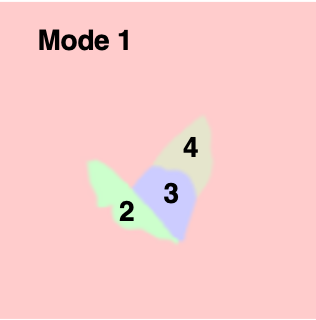

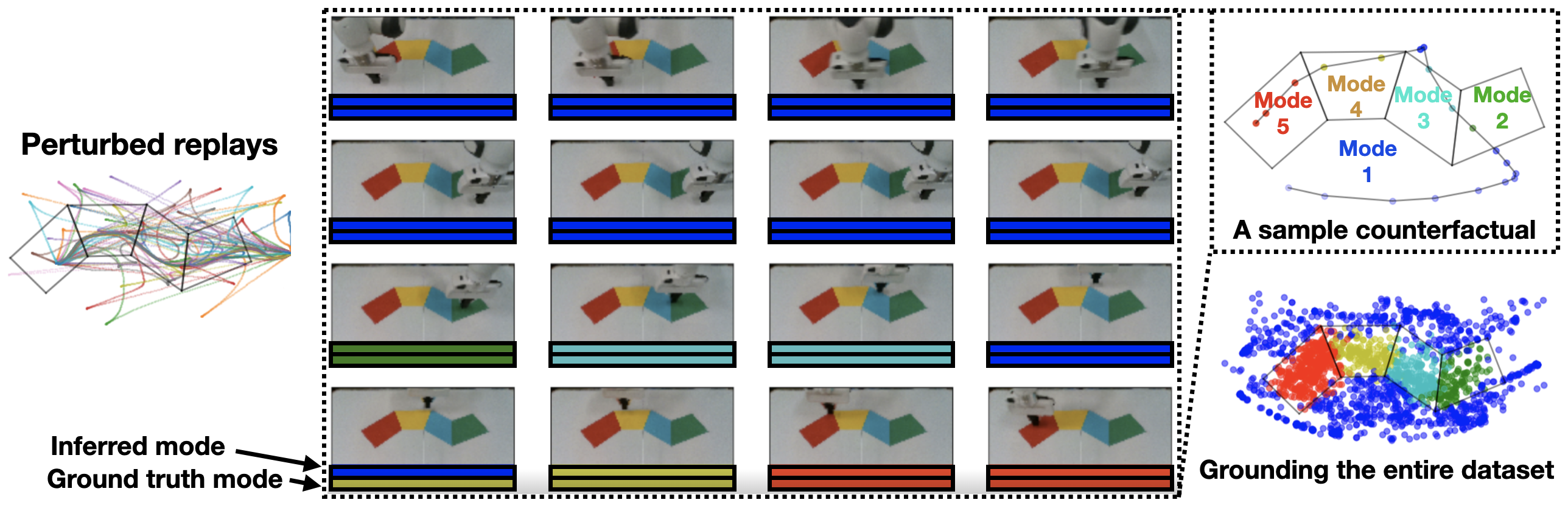

(a) Demonstrations and ground truth modes |

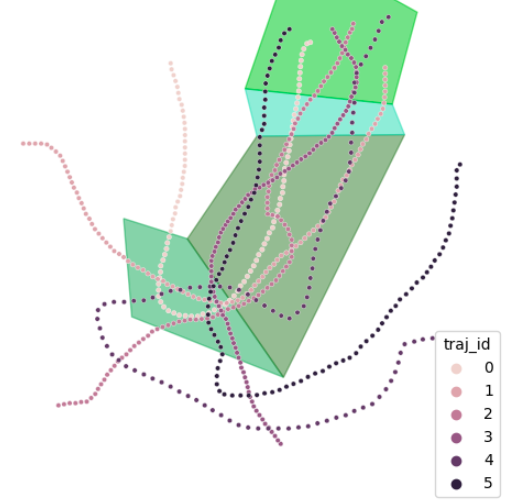

(b) Grounding learned by GLiDE |

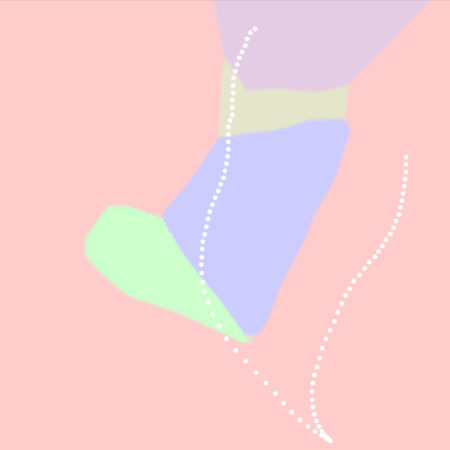

(c) GLiDE without counterfactual data |

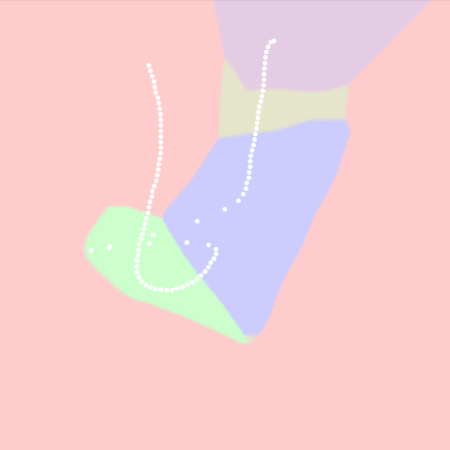

(d) GLiDE with incorrect number of modes |

(e) Baseline: similarity-based clustering |


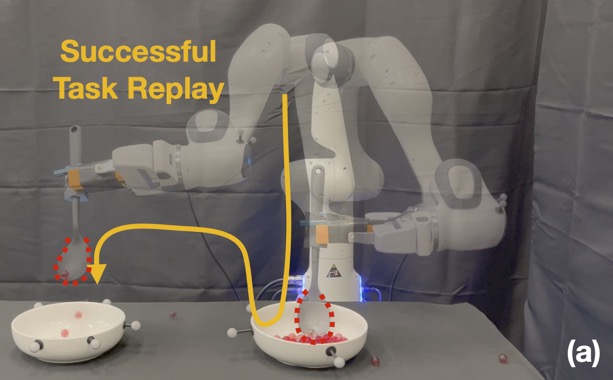

Successful Execution 1

Successful Execution 2

Failing Counterfactual 1

Failing Counterfactual 2


Planning without learned grounding leads to failures due to invalid mode transitions

Planning with learned grounding leads to successful recovery that obeys mode constraints


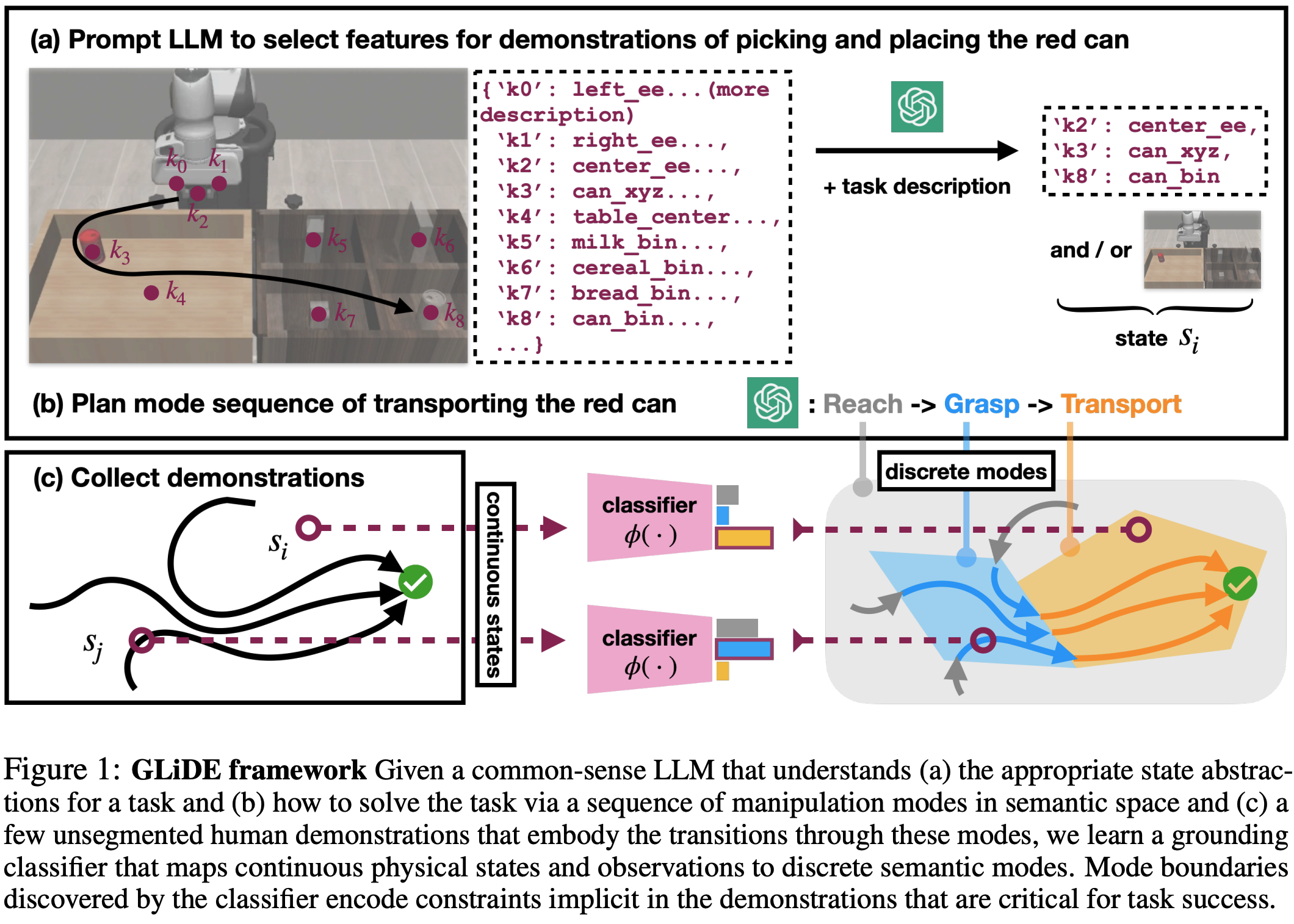

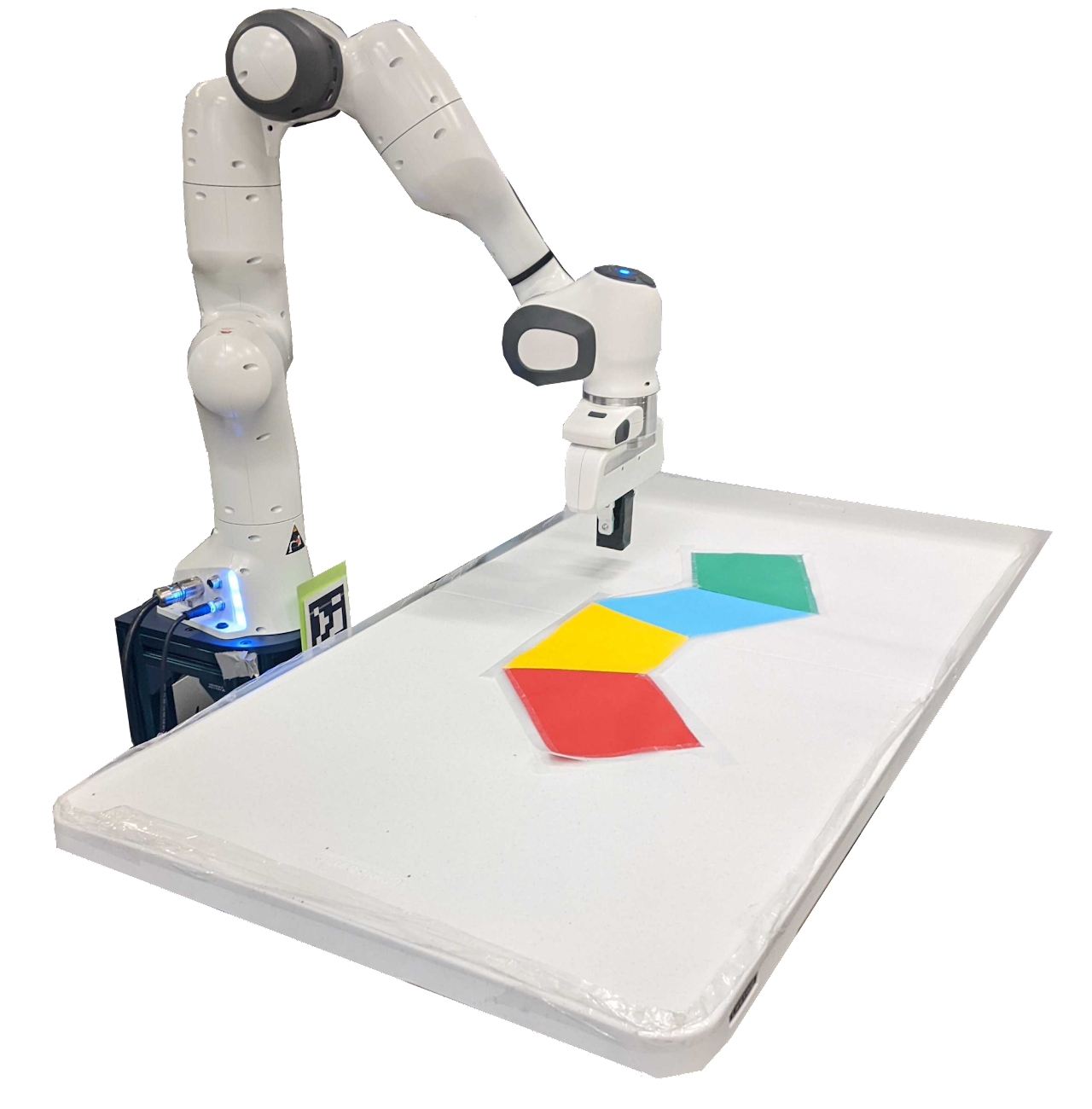

Data Collection Setup

Human demonstrations: Mode 1 -> 2 -> 3 -> 4 -> 5. Invalid mode transitions: 1 -> 3, 1 -> 4, or 1 -> 5.

Synthetic perturbations are added to demonstration replays, with automatic labeling and automatic reset.
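The mode ordering above can be illustrated with a small check. This is a minimal sketch, not the project's code: it assumes per-timestep mode labels are already available as integers 1 to 5, it encodes only the three invalid transitions listed above, and it leaves every other pair unclassified. The names `INVALID_TRANSITIONS` and `first_invalid_transition` are hypothetical.

```python
from typing import Optional, Sequence

# Only the transitions explicitly listed as invalid above (assumption:
# modes are given as integer labels 1..5). Other pairs are not judged here.
INVALID_TRANSITIONS = {(1, 3), (1, 4), (1, 5)}


def first_invalid_transition(modes: Sequence[int]) -> Optional[int]:
    """Return the index of the first timestep reached through a listed
    invalid transition, or None if no such transition occurs."""
    for t in range(1, len(modes)):
        if (modes[t - 1], modes[t]) in INVALID_TRANSITIONS:
            return t
    return None


if __name__ == "__main__":
    demonstration = [1, 1, 2, 2, 3, 4, 5]  # follows 1 -> 2 -> 3 -> 4 -> 5
    perturbed = [1, 1, 3, 4, 5]            # skips mode 2
    print(first_invalid_transition(demonstration))
    print(first_invalid_transition(perturbed))
```

In this toy example the demonstration passes the check, while the perturbed sequence is flagged at the timestep where it jumps from mode 1 directly to mode 3.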


Naive policy: without knowledge of the modes, the robot simply continues after a perturbation, which can lead to invalid mode transitions.

Mode-conditioned policy: by accurately recognizing the underlying modes, our method recovers when perturbations cause unexpected mode transitions.
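The contrast between the two policies can be sketched as follows. This is a simplified illustration under stated assumptions, not the method itself: the observed mode is assumed to be given (standing in for the learned mode classifier), and a fixed plan lookup stands in for any replanning step. `PLAN`, `naive_next_target`, and `mode_conditioned_next_target` are hypothetical names.

```python
from typing import List

# Assumed mode plan, taken from the demonstration ordering 1 -> 2 -> 3 -> 4 -> 5.
PLAN: List[int] = [1, 2, 3, 4, 5]


def naive_next_target(plan_index: int, plan: List[int] = PLAN) -> int:
    """Mode-agnostic: advance along the plan by position, ignoring
    which mode the robot is actually in."""
    return plan[min(plan_index + 1, len(plan) - 1)]


def mode_conditioned_next_target(observed_mode: int, plan: List[int] = PLAN) -> int:
    """Mode-conditioned: pick the successor of the observed mode, so a
    perturbation that knocks the robot back resumes from where it landed."""
    i = plan.index(observed_mode)
    return plan[min(i + 1, len(plan) - 1)]


if __name__ == "__main__":
    # The robot had reached mode 3 (plan index 2) when a perturbation
    # returned it to mode 1.
    print(naive_next_target(2))             # targets mode 4 from mode 1
    print(mode_conditioned_next_target(1))  # targets mode 2 from mode 1
```

In the scenario shown, the naive rule attempts the transition 1 -> 4, which is one of the invalid transitions, while the mode-conditioned rule heads for mode 2 first.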


Learned Mode Segmentation of Demonstrations for RoboSuite Manipulation Tasks


(Left) We manually set a ground truth based on RoboSuite state information to benchmark our learned mode segmentation, visualized in the left figure. Can Task: pick up the can and place it in the bottom-right bin. Lifting Task: lift the block above a specified height. Square Peg Task: pick up the square nut and place it on the square peg. You can view pictures of all of the classification results here.
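One simple way to benchmark a learned segmentation against a ground truth is per-timestep agreement. The sketch below is an assumption about how such a comparison could be scored, not the metric used on this page: it assumes both label sequences cover the same timesteps and share the same mode indexing. `segmentation_accuracy` is a hypothetical helper.

```python
from typing import Sequence


def segmentation_accuracy(predicted: Sequence[int], ground_truth: Sequence[int]) -> float:
    """Fraction of timesteps where the predicted mode equals the
    ground-truth mode. Assumes aligned timesteps and shared mode indices."""
    if len(predicted) != len(ground_truth):
        raise ValueError("label sequences must have the same length")
    if len(ground_truth) == 0:
        raise ValueError("label sequences must be non-empty")
    matches = sum(p == g for p, g in zip(predicted, ground_truth))
    return matches / len(ground_truth)


if __name__ == "__main__":
    ground_truth = [1, 1, 1, 2, 2, 3, 3, 3]
    predicted = [1, 1, 2, 2, 2, 3, 3, 3]  # one boundary placed a step early
    print(segmentation_accuracy(predicted, ground_truth))  # 0.875
```

If the learned modes were indexed differently from the ground truth, the labels would need to be matched first, which this sketch does not attempt.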

(Below) To show that the learned grounding can facilitate planning with LLMs, we apply perturbations to Behavioral Cloning (BC) rollouts below. BC performance drops significantly under perturbations, but our method generally recovers better: after the robot drops the can, it is directed to pick up the can again.


Learned Grounding facilitates replanning with LLMs to improve execution success rate for RoboSuite Tasks


Behavior cloning (BC) rollouts: 93% success

BC rollouts (perturbed): 20% success

Mode-conditioned BC (perturbed): 40% success


Mode-agnostic BC cannot recover from task-level perturbations (e.g., dropping all marbles)

Mode-conditioned BC enabled by the learned grounding can leverage an LLM to replan


Yanwei Wang, Nadia Figueroa, Shen Li, Ankit Shah, Julie Shah

arxiv / code / project page

CoRL 2022 (Oral, acceptance rate: 6.5%)

IROS 2023 Workshop (Best Student Paper, Learning Meets Model-based Methods for Manipulation and Grasping Workshop)

We present a continuous motion imitation method that can provably satisfy any discrete plan specified by a Linear Temporal Logic (LTL) formula. Consequently, the imitator is robust to both task- and motion-level disturbances and guaranteed to achieve task success.