Abstract

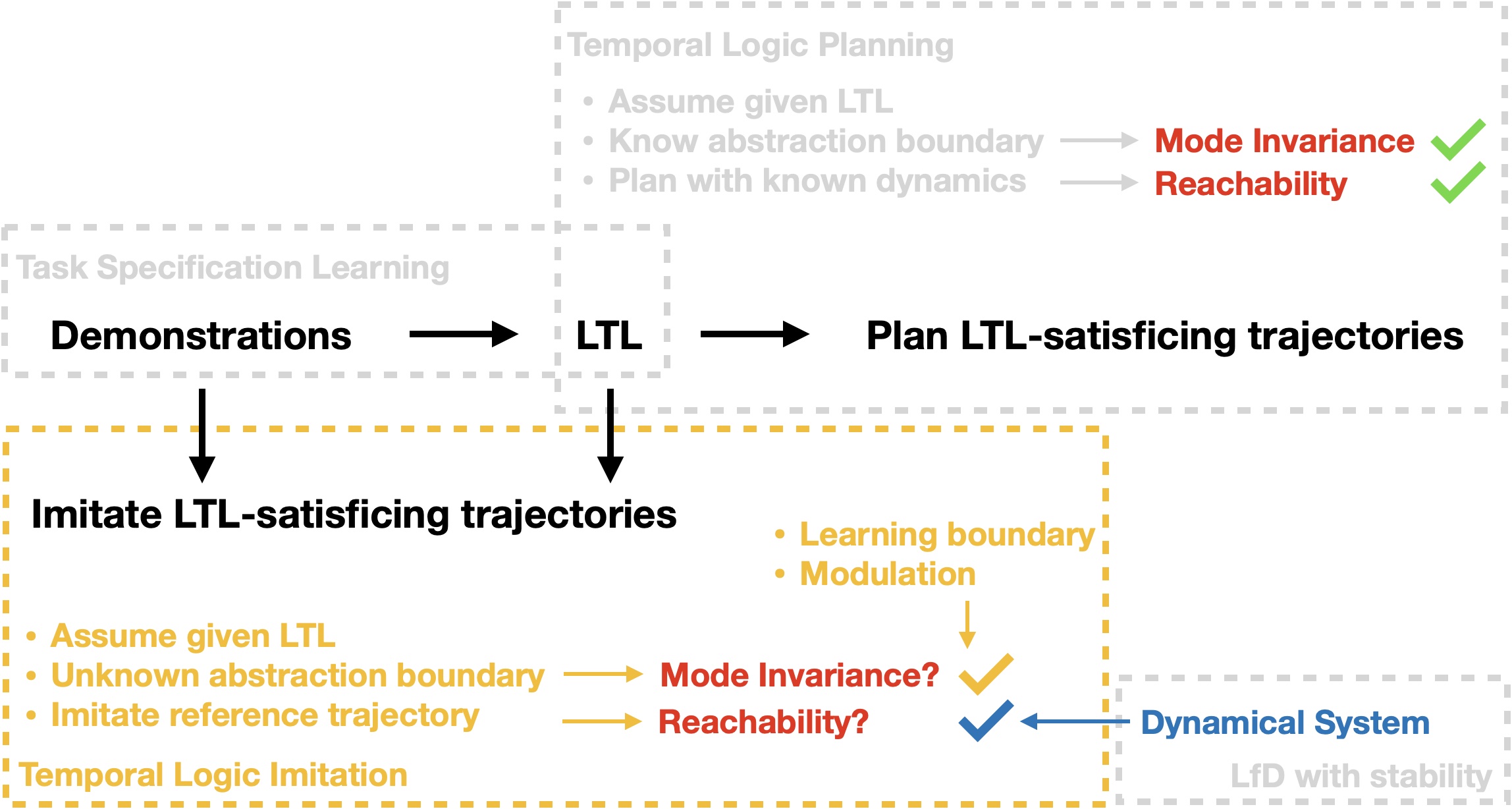

Generically Learned Motion Policy / Motion Policy with Stability Guarantee

Motion Policy without Mode Invariance / with Mode Invariance

Iterative Boundary Estimation of Unknown Mode with Cutting Planes

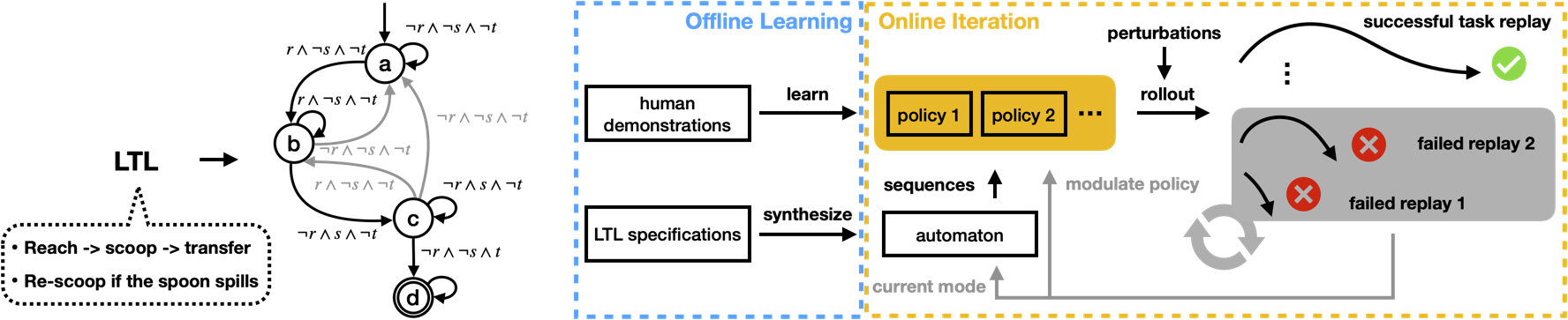

To modulate motion policies so that they become mode-invariant, the unknown mode boundary is first estimated. Invariance failures detected by sensors are used to find cutting planes that bound the mode, and the DS (dynamical system) flows are modulated to stay within this bound.

Note that flows that have left the mode re-enter it due to the reactivity of the LTL plan, so each failure yields a new cutting plane and the boundary estimate improves iteratively.
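A minimal sketch of the cutting-plane idea, under loudly stated assumptions: the mode estimate is a convex polytope {x : A x <= b}; each detected invariance failure adds one plane through the last in-mode state, with an outward normal pointing at the state where the failure was sensed; and modulation simply removes the outward velocity component at an active plane. The class name `CuttingPlaneModeEstimate`, the cut placement rule, the projection-based modulation, the toy `true_mode` check, and resetting to the last in-mode state (standing in for re-entry) are all hypothetical choices for illustration, not the method's actual modulation scheme.

```python
import numpy as np


class CuttingPlaneModeEstimate:
    """Hypothetical sketch: approximate the unknown mode as {x : A x <= b},
    refined by one cutting plane per detected invariance failure."""

    def __init__(self, dim):
        self.A = np.zeros((0, dim))  # one row per cutting plane (unit outward normal)
        self.b = np.zeros(0)         # plane offsets

    def add_cut(self, x_in, x_out):
        """Add a plane through the last in-mode state x_in whose outward normal
        points toward x_out, the state where the failure was sensed (assumed rule)."""
        x_in = np.asarray(x_in, dtype=float)
        n = np.asarray(x_out, dtype=float) - x_in
        n /= np.linalg.norm(n)
        self.A = np.vstack([self.A, n])
        self.b = np.append(self.b, n @ x_in)

    def contains(self, x):
        return bool(np.all(self.A @ x <= self.b + 1e-9))

    def modulate(self, x, v, margin=1e-3):
        """Remove the outward velocity component at every active plane.
        Exact for a single active plane; corners would need a small QP."""
        v = np.asarray(v, dtype=float).copy()
        for a, b in zip(self.A, self.b):
            if a @ x >= b - margin and a @ v > 0:
                v -= (a @ v) * a
        return v


def true_mode(x):
    # Hypothetical ground-truth mode; only its violations are "sensed".
    return x[0] <= 1.0


est = CuttingPlaneModeEstimate(dim=2)
target = np.array([2.0, 0.5])  # linear DS attractor, placed outside the mode
x, dt = np.array([0.0, 0.0]), 0.05
failures = 0
for _ in range(200):
    v = est.modulate(x, target - x)  # nominal linear DS, then modulated
    x_next = x + dt * v
    if true_mode(x_next):
        x = x_next
    else:
        failures += 1            # sensor flags an invariance failure
        est.add_cut(x, x_next)   # stay at the last in-mode state and add a cut
print(failures, x)
```

In this toy run the nominal flow points straight at a target outside the true mode; after the single failure, the modulated flow stops at the new cut instead of leaving again. With several active planes at a corner, sequential projection is not exact, and a small quadratic program would be the safer choice.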

Generalization to New Tasks by Reusing Learned Skills

Line Inspection Task

Color Tracing Task

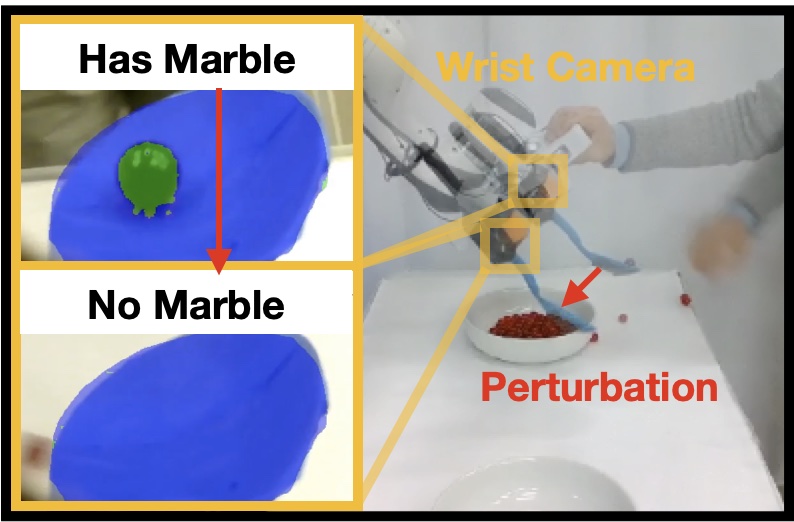

Scooping Task

A permanent interactive exhibition at the MIT Museum on programming robots via demonstrations

Yanwei Wang, Tsun-Hsuan Wang, Jiayuan Mao, Michael Hagenow, Julie Shah

arxiv / code / project page

ICLR 2024 (Spotlight, acceptance rate: 5%)

This work learns grounding classifiers for LLM planning. By locally perturbing a few human demonstrations, we augment the dataset with more successful executions and failing counterfactuals. Our end-to-end, explanation-based network is trained to differentiate successes from failures and, as a by-product, learns classifiers that ground continuous states into discrete manipulation mode families without dense labeling.
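The augmentation step described above can be pictured with a small sketch. It assumes a demonstration is a `(T, d)` array of waypoints, that a "local perturbation" is a constant offset applied to one random contiguous segment, and that each perturbed trajectory is labeled by replaying it through a success check. The function `augment_by_perturbation`, the offset model, and the `reaches_goal` oracle are hypothetical illustrations, not the paper's actual perturbation or labeling procedure.

```python
import numpy as np


def augment_by_perturbation(demo, succeeds, n_samples=100, scale=0.05, seed=0):
    """Hypothetical sketch: perturb one contiguous segment of a (T, d) demo and
    sort the results into successes and failing counterfactuals via `succeeds`."""
    rng = np.random.default_rng(seed)
    T, d = demo.shape
    positives, negatives = [], []
    for _ in range(n_samples):
        t0 = rng.integers(0, T)              # perturbation onset
        t1 = rng.integers(t0 + 1, T + 1)     # segment end (exclusive)
        offset = rng.normal(0.0, scale, size=d)
        traj = demo.copy()
        traj[t0:t1] += offset                # locally displace the segment
        (positives if succeeds(traj) else negatives).append(traj)
    return positives, negatives


# Toy usage: a straight-line 2-D demo and an assumed goal-reaching oracle.
goal = np.array([1.0, 1.0])
demo = np.linspace([0.0, 0.0], [1.0, 1.0], 20)


def reaches_goal(traj, tol=0.05):
    return bool(np.linalg.norm(traj[-1] - goal) < tol)


pos, neg = augment_by_perturbation(demo, reaches_goal)
print(len(pos), len(neg))
```

In this toy setup only perturbations that displace the final waypoint can fail; a real task would replace `reaches_goal` with an execution-level success signal.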